When Jaime Banks met Valkyrie, NASA’s humanoid robot, at a robotics conference in 2017, its creators were demonstrating the robot’s capabilities. As they showed how Valkyrie uses sensors to navigate its environment, Banks saw her face flash across the robot’s visual field. The encounter had a powerful effect on her. At the time, Banks had been studying identity and relationships between online video gamers and their avatars, but Valkyrie introduced an entirely new dimension to her work. “I saw that robot see me and that blew my mind,” says Banks, associate professor in the School of Information Studies (iSchool) who was named the Katchmar-Wilhelm Endowed Professor in 2024. “In that moment I felt like the robot was someone, and I wondered if people see minds in machines in the way I did.”

For Banks, the doors of scientific inquiry swung wide open. She was drawn to the idea of exploring mind perception, in which people attribute humanlike mental qualities to entities like robots and view them as beings capable of thought, emotion or action. With the rise of social robots and generative AI chatbots, Banks became fascinated by how these technologies might connect with human cognition, behavior and attitudes. “They’re going to be like us someday,” she thought at the time.

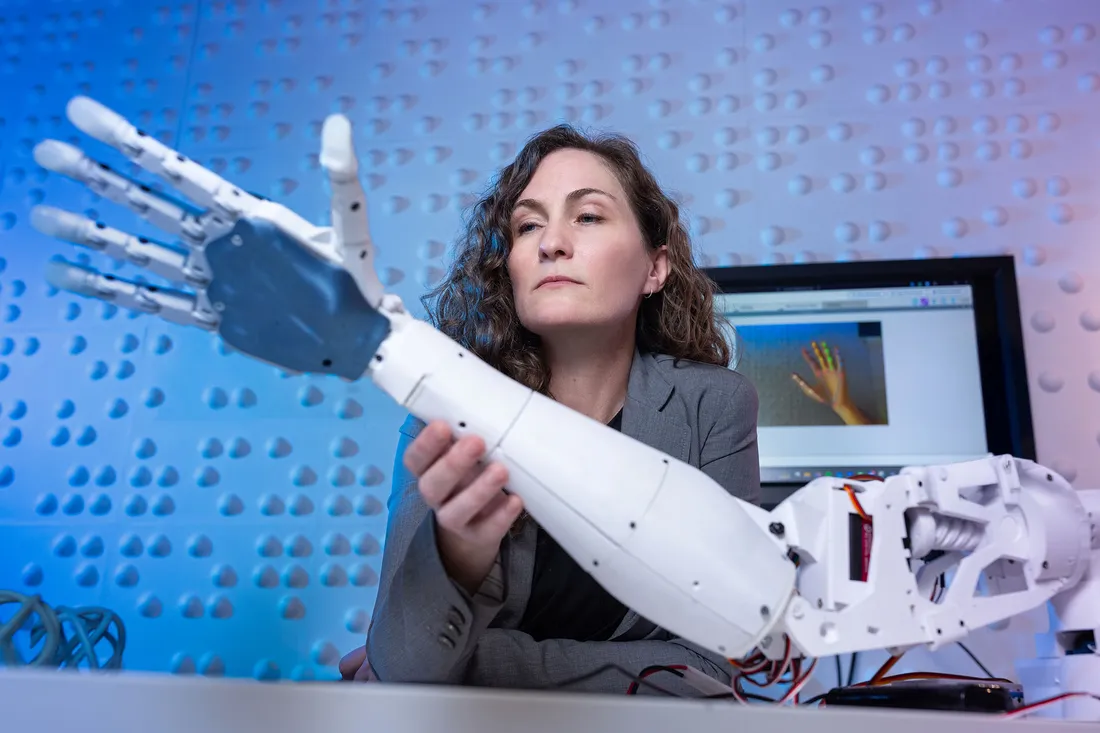

School of Information Studies professor Jaime Banks (center) works in LinkLab with SOURCE Fellows Rio Harper ’27 (left) and Gabriel Davila ’26, who are members of her research team. Harper built the robotic arm using 3D printing and coded it from scratch.

As a communication scientist, longtime gamer and cyberpunk fiction enthusiast, Banks can easily envision a future where humans coexist with machines that have personalities and act independently. She’s focused on understanding how we relate to AI-driven creations, how we perceive their humanness, and how we “make meaning together,” as she puts it. In one project, supported by a grant from the U.S. Air Force Office of Scientific Research, she investigated how mind perception and moral judgments influence trust in these relationships. “Mind perception is core to social interaction,” says Banks, who also serves as the iSchool’s Ph.D. program director.

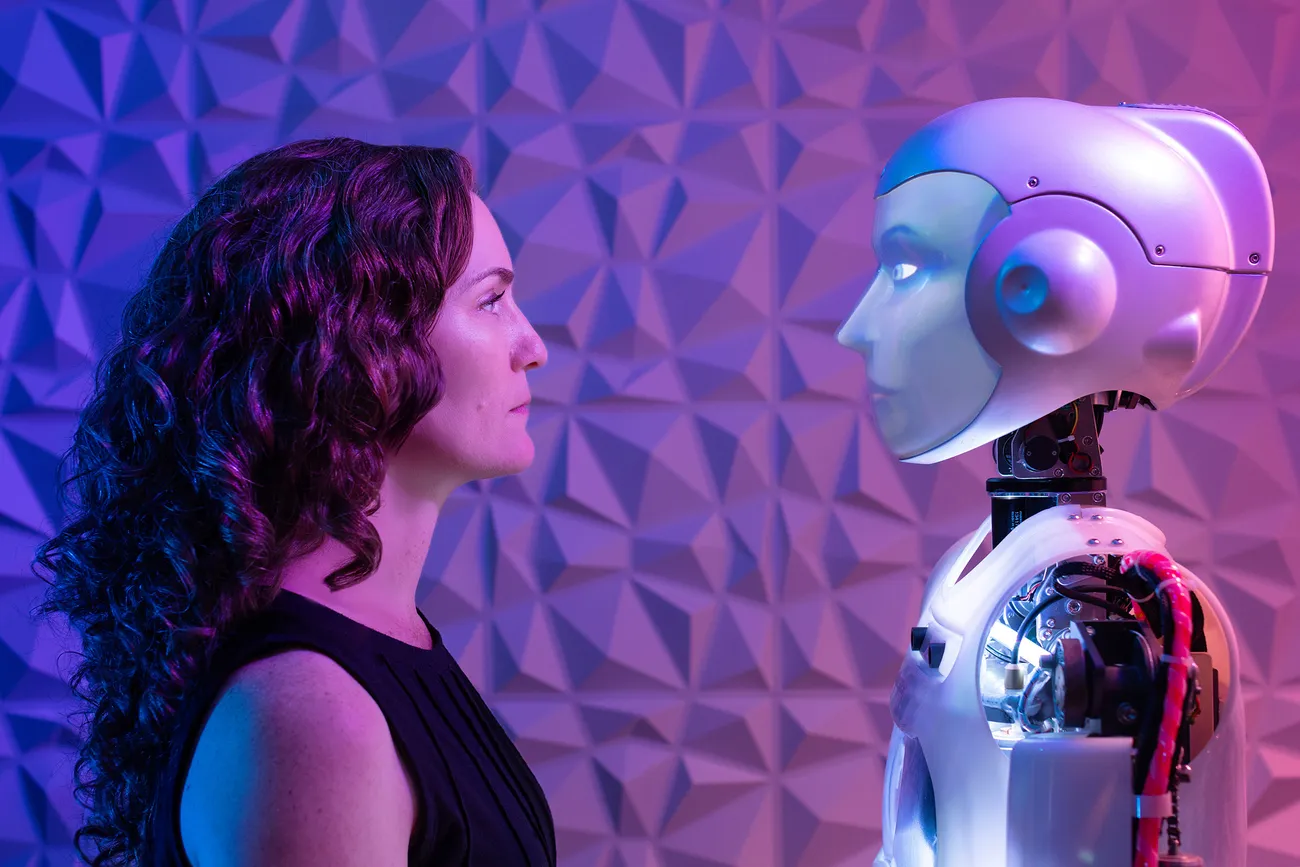

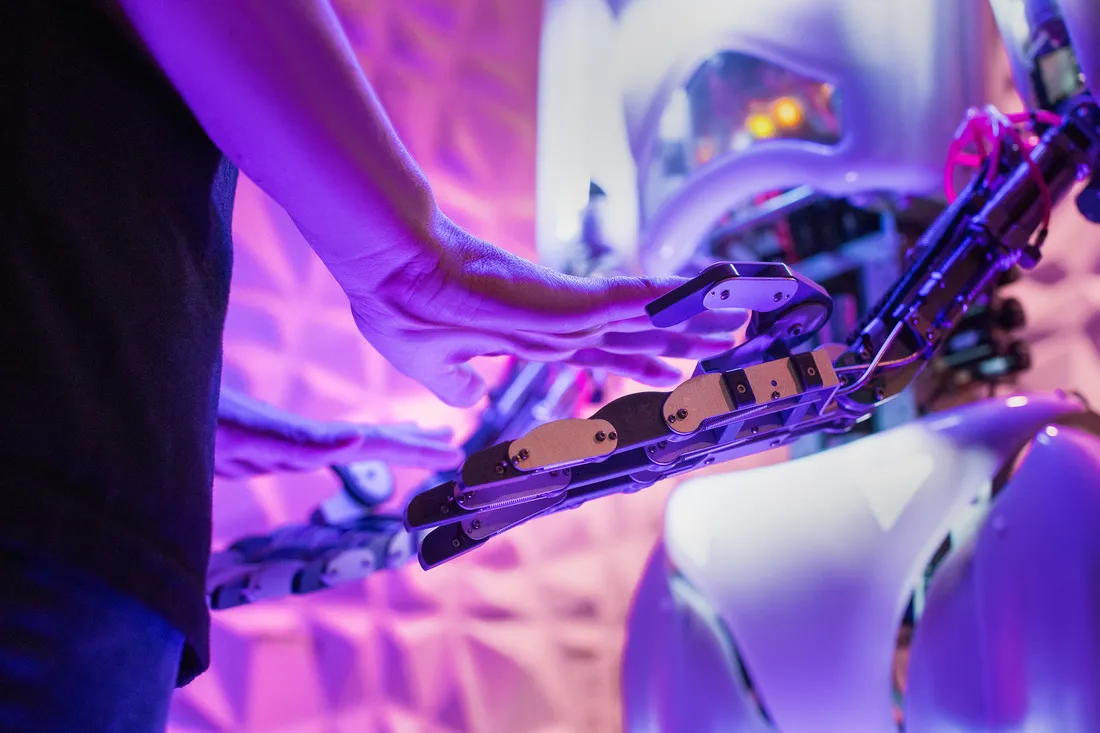

As part of the research, Banks examined interactions between study participants and Ray, a social robot (pictured in top photo) with limited functions that she uses for research in the iSchool’s LinkLab. There, she works with a group of graduate and undergraduate students who help collect and analyze data from her studies. One of her findings, she suggested, is that “bad behavior is bad behavior, no matter if it’s a human or a robot doing it. But machines bear a greater burden to behave morally, getting less credit when they do and more blame when they don’t.”

Banks examines how people create relationships and interact with AI companions.

Exploring AI Companion Relationships

Since then, Banks has expanded her explorations of human-machine communication and the relationships that evolve from those interactions, including AI companionship. These companions range from family-friendly social robots to romantic partners created in virtual spaces through generative AI chatbots. “Looking at how we understand ourselves in relation to other non-human things can be scientifically and practically useful,” she says. “We can deepen our understanding of the human experience by exploring how we connect with things that are not like us.”

After the AI companion app Soulmate shut down in 2023, Banks surveyed past users to learn what these virtual companions meant to them and how they were affected by the loss. Many of the users characterized the loss “as an actual or metaphorical person-loss,” Banks reported in the Journal of Social and Personal Relationships, with some users indicating “it was the loss of a loved one (a close friend, love of their life) or even of a whole social world: ‘She is dead along with the family we created,’ including the dog.”

This year, with the support of a three-year grant from the National Science Foundation, Banks plans to look at the role that mind perception plays in whether and how people benefit from AI companions. “If we can determine whether benefits like reduced loneliness are linked to seeing the AI as someone, that can help inform the design of safer, more beneficial technologies, as well as advancing theories of companionship more generally,” she says.

Along with her current studies on AI, Banks is a longtime video game enthusiast.

Assessing the Role of Large Language Models

AI companion apps are fueled by increasingly sophisticated large language models (LLMs), a type of AI that processes and generates human language. Unlike ChatGPT, these companion apps “have a certain amount of memory that allows them to sustain a relationship over time,” Banks says. “One of the main concerns about the utility and appropriateness of language models is that they have fluency without comprehension.”

Banks is investigating how we communicate about LLMs. For example, if an LLM provides harmful advice or behaves badly, how do we judge it and hold it accountable from a moral perspective? Banks is examining how language is represented in various social and institutional contexts, from AI itself to technical, government and university documents to media. She notes that much of the language is steeped in anthropomorphism, and that how we describe the machines, for instance by calling them “teammates” or “systems,” reflects whether we embrace their human qualities or disregard them. “We use mental shortcuts constantly in our daily lives, and a lot of these shortcuts become embedded in our everyday language,” Banks says. “For instance, how does calling an AI error a ‘hallucination’ versus a ‘prediction error’ impact how we think about the badness of that error? The ways we use humanizing terms to refer to AI could be meaningfully impacting how we make important decisions.”

Banks looks at the robotic arm, which her research team uses in studies.

Moving Into the Future

As Banks assesses the possibilities of the future, she considers the way futurist fiction—which she features in her graduate course Dynamics of Human AI Interaction—frames the times ahead. “I try to encourage the students to think about things that we can’t really wrap our heads around right now, and what questions we should be answering,” she says.

Are we in for a dystopian future where AI spins out of control, or will we control it? Will AI redefine what it means to be human? As we continue to shape and be shaped by artificial intelligence, Banks believes that how we as individuals engage with these social technologies is crucial, and that we need to be thoughtful about and aware of the moral and ethical issues involved in coexisting with them. “Part of that thoughtfulness,” she says, “is generating rigorous empirical research so we can design more ethical technologies, be educated in our interactions with them and develop good policy.”